by Rik Farrow

Toward the end of 2001, the programmers for the Google search engine

added the ability to index several additional binary file types. Google

could already search PDF files, but some people considered

that being able to search for fields within an Excel spreadsheet file

a bit over the top.

The sad truth is that if you keep sensitive files on any Web server,

you are inviting people to view or copy those files. And not just

Web servers, either. FTP servers can also be indexed by automated

scanning tools, similar to Web indexing robots.

The worst offenders so far have been e-commerce sites, where it is

common to find database files with credit card info. Any information

that you must keep confidential should never be stored on any public

network service.

The advent of Web searching tools, like AltaVista, added a new way

to find systems to attack. Through the use of a text-based search,

attackers could look for files containing the string "root:" in

hopes that it would be a real UNIX password file containing password

hashes that could be supplied to a password cracker. While this

may seem absurd, it was actually quite common in the early days of

the Web, when many people set up FTP servers in parallel with Web

servers.
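
The same pattern match can be turned around and used defensively. The following is a minimal sketch, assuming you want to audit your own document root for files that look like a UNIX password file before a search engine finds them. The function name, the regular expression, and the sample file names are illustrative assumptions, not part of any existing tool.

```python
import os
import re
import tempfile

# A passwd-style entry for root: seven colon-separated fields, UID and GID 0.
ROOT_ENTRY = re.compile(r"^root:[^:]*:0:0:[^:]*:[^:]*:[^:]*$")


def find_passwd_like_files(doc_root):
    """Return the paths under doc_root that contain a root passwd entry."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(doc_root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "r", errors="ignore") as handle:
                    if any(ROOT_ENTRY.match(line.rstrip("\n")) for line in handle):
                        hits.append(path)
            except OSError:
                continue  # unreadable file: skip it rather than abort the scan
    return sorted(hits)


if __name__ == "__main__":
    # Demonstration on a throwaway directory, not on a real server.
    with tempfile.TemporaryDirectory() as root:
        with open(os.path.join(root, "index.html"), "w") as page:
            page.write("<html>root: of the tree</html>\n")
        with open(os.path.join(root, "passwd.bak"), "w") as copy:
            copy.write("root:x:0:0:root:/root:/bin/sh\n")
        print(find_passwd_like_files(root))
```

The check is stricter than a plain search for "root:", so an ordinary page that merely contains that string is not reported.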

Most Web searching tools "walk" through Web sites, starting with

the home page, and following all the links found there. If there

is a file named robots.txt, polite search engines skip the parts of

the site that it excludes. Search engines may also use the indexing features

of most Web servers to find pages that are hidden, that is, do not

have links pointing to them.

Of course, Web searching robots don't have to be polite. Search

engines designed to collect email addresses for use in spam will

ignore robots.txt files, for example, and may try directory names

in attempts to invoke indexing.
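
To make the "polite" behavior concrete, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt contents, the robot name "PoliteBot", and the example.com URLs are assumptions made up for illustration. The point to notice is that the check happens entirely on the robot's side: nothing on the server enforces it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /cgi-bin/
"""


def build_parser(robots_text):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, agent, urls):
    """Keep only the URLs that a polite robot would agree to fetch."""
    return [url for url in urls if parser.can_fetch(agent, url)]


if __name__ == "__main__":
    candidates = [
        "http://www.example.com/index.html",
        "http://www.example.com/private/customers.xls",
    ]
    print(allowed_urls(build_parser(ROBOTS_TXT), "PoliteBot", candidates))
```

A robot that never calls the check simply fetches everything, and the Disallow lines themselves tell a curious reader which directories the site owner considers worth hiding.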

Both Apache and IIS have mechanisms for disabling directory listing.

You can do this using the IIS administrative tools, and via the

configuration files for Apache. For example, under Apache, by

setting "Options None", directory listing is implicitly disabled.

Directory listing gets enabled under Apache by adding "Indexes" to

an "Options" directive ("FancyIndexing" only changes how the listing

looks), either in the httpd.conf file or in

.htaccess files within specific directories under the document

root.
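
One way to check a configuration for this is sketched below. It assumes that listing is switched on through the "Indexes" keyword of Apache's Options directive (or through "Options All", which includes it), and it reads the configuration as plain text. The function name and the sample directory paths are hypothetical, and the check is deliberately simple: it does not model how Apache merges Options across nested sections or .htaccess files.

```python
# Hypothetical configuration fragment used for the demonstration.
SAMPLE_CONFIG = """\
<Directory "/var/www/html">
    Options None
</Directory>
<Directory "/var/www/html/pub">
    Options Indexes FollowSymLinks
</Directory>
"""


def options_enabling_listing(config_text):
    """Return (line_number, line) pairs for Options lines that turn on Indexes."""
    flagged = []
    for number, raw in enumerate(config_text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        tokens = line.split()
        if tokens[0].lower() != "options":
            continue
        values = {token.lower() for token in tokens[1:]}
        # "All" includes Indexes, so it is flagged as well.
        if values & {"indexes", "+indexes", "all"}:
            flagged.append((number, line))
    return flagged


if __name__ == "__main__":
    for number, line in options_enabling_listing(SAMPLE_CONFIG):
        print(f"line {number}: {line}")
```

Running the same function over each .htaccess file under the document root covers the per-directory case.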

And Web servers are not the only servers indexed. There are people

running software that searches FTP servers as well. These scanners

first probe for the existence of an FTP server (a service listening

at port 21/TCP), attempt an anonymous login, and if successful, use

the directory listing and change directory commands to list all directories.

If you think that files that you have stored on anonymous FTP servers

are safe because you have never advertised their presence, think again.
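
To see what such a scanner would find on a server you are responsible for, the steps above can be sketched with Python's standard ftplib module. This is a rough illustration, not a reconstruction of any particular scanning tool: the function names and the depth limit are assumptions, and a name is treated as a directory simply because the server accepts a change directory command for it.

```python
from ftplib import FTP, error_perm


def list_anonymous_tree(ftp, start="/", max_depth=3):
    """Walk a logged-in FTP session the way an indexing scanner would."""
    found = []

    def walk(path, depth):
        ftp.cwd(path)
        try:
            names = ftp.nlst()
        except error_perm:
            names = []  # some servers answer 550 for an empty directory
        for name in names:
            base = name.rstrip("/").rsplit("/", 1)[-1]
            if base in ("", ".", ".."):
                continue
            child = path.rstrip("/") + "/" + base
            try:
                ftp.cwd(child)
            except error_perm:
                found.append(child)  # cwd refused: treat it as a plain file
                continue
            found.append(child + "/")
            if depth < max_depth:
                walk(child, depth + 1)

    walk(start, 1)
    return found


def audit_own_server(host):
    """Log in anonymously to a server you administer and list what is visible."""
    with FTP(host, timeout=10) as ftp:
        ftp.login()  # no arguments means an anonymous login
        return list_anonymous_tree(ftp)
```

Anything this walk returns is visible to every anonymous visitor, whether or not a link to it was ever published.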

When Google added the ability to index several new filetypes, including

Microsoft Office, PostScript, Corel WordPerfect, Lotus 1-2-3, and others,

some people got alarmed (see Resources). Of course, this is silly, like

thinking that you can prevent your bicycle from being stolen from your

front porch by draping a sheet over it. While the ability to use

powerful search engines, like Google, to search for specific patterns

is like having someone provide a bicycle thief with a list of porches

with "hidden" bicycles, complaining about Google is not the way to

protect your assets.

Sensitive information does not belong on public network servers. The

most egregious cases include e-commerce sites, often running IIS, that

include an MS-SQL database of customer information on the same Web

server. The most common explanations I hear for doing this are

that it is more convenient to do so, and the performance is better.

While it may be convenient having the database file on the same server,

it is like using the sheet to "protect" the bicycle. The second

argument is not even true.

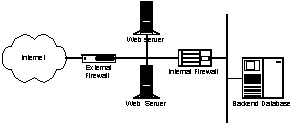

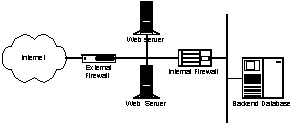

The correct way to store sensitive information that may be accessed

through Web servers is to store this information on an internal

server protected by a firewall (see diagram). The internal server performs its

own authentication, and should also include code to check that any

queries made are within the boundaries expected. For example, a

customer might be able to query his or her own account for shipping

information, but not some other customer's account. In

some cases, you might want to write a proxy designed to perform the

authentication and query checking features, if your database or

backend server cannot do this for you.
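
The boundary check described above can be sketched as a small function sitting in front of the database. This is an illustration under stated assumptions: an in-memory SQLite database stands in for the real backend server, and the table layout, column names, and function names are invented for the example. The two ideas it shows are that the query is refused unless the requested account matches the authenticated one, and that the value reaches the SQL engine only as a bound parameter.

```python
import sqlite3


def make_demo_backend():
    """Build a throwaway database standing in for the internal server."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE shipments (customer_id INTEGER, order_id INTEGER, status TEXT)"
    )
    db.executemany(
        "INSERT INTO shipments VALUES (?, ?, ?)",
        [(1, 100, "shipped"), (1, 101, "packing"), (2, 200, "shipped")],
    )
    return db


def shipping_status(db, authenticated_customer_id, requested_customer_id):
    """Answer a shipping query only for the caller's own account."""
    if not isinstance(requested_customer_id, int):
        raise ValueError("customer id must be an integer")
    if requested_customer_id != authenticated_customer_id:
        raise PermissionError("customers may only query their own account")
    rows = db.execute(
        "SELECT order_id, status FROM shipments "
        "WHERE customer_id = ? ORDER BY order_id",
        (authenticated_customer_id,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    backend = make_demo_backend()
    print(shipping_status(backend, 1, 1))
    try:
        shipping_status(backend, 1, 2)
    except PermissionError as refusal:
        print("refused:", refusal)
```

Because the Web server never sends free-form SQL, a compromised front end can ask only the questions this function is willing to answer.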

Splitting the Web server front end from the backend server is a little

harder to do. And that is the usual argument for not doing so, along

with whining about performance issues. For most sites, the internal

network runs at (at least) 10 Mbps, whereas the Internet connection is

some fraction of this speed, so complaining about network bottlenecks

is a specious argument. And splitting the front end from the back end

servers means that there are now two systems sharing the work of handling

requests.
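
The bandwidth comparison is simple arithmetic. As a worked example, assume the Internet connection is a single T1 line at 1.544 Mbps; that figure is an assumption chosen for illustration, while the 10 Mbps internal speed is the lower bound given above.

```python
LAN_MBPS = 10.0   # internal network speed, the lower bound quoted above
T1_MBPS = 1.544   # assumption: the site's Internet link is a single T1


def lan_to_wan_ratio(lan_mbps, wan_mbps):
    """How many times more traffic the internal network can carry."""
    return lan_mbps / wan_mbps


if __name__ == "__main__":
    ratio = lan_to_wan_ratio(LAN_MBPS, T1_MBPS)
    print(f"The internal network has {ratio:.1f} times the capacity of the Internet link")
```

Under that assumption the internal network has more than six times the capacity of the link that feeds it, so the hop between the front end and the back end is not where requests queue up.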

The only places where this is not true are hosted sites that actually

pay for Ethernet-level (and faster) connectivity. Even these sites need to use

two different systems for the servers, with a firewall in between. Both

servers should be managed over VPN or other encrypted links, such as

SSH. This will cost more, as the hosting site will charge additional

fees for the use of more rack space, but is well worth it.

Another common argument used by people who want to put sensitive

information on a public Web server is that the information will

not be accessible through the Web server. Hiding information this way

has not been at all reliable, especially on IIS servers, where there

have been many exploits for accessing files not beneath the Web root

directory. Even the much more secure Apache Web server has had

bugs involving the ability to list directories outside of the server

root.

UNIX and Linux systems do have a capability, called change root (chroot), for

running a process with a limited view of the local file systems. There

have been exploits for breaking out of a change root environment, making

this technique, while safer, still unsafe. One version of UNIX,

FreeBSD (www.freebsd.org), includes an enhanced change root, called

a jail, that provides even more security. While the FreeBSD jail

is stronger than the standard change root, I still recommend that

you not take chances, and use frontend and backend servers. A

single mistake here may mean disaster.
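
For readers who have not used change root, a minimal sketch of the usual sequence follows. It assumes a UNIX-like system and a process started as root; the function name and its parameters are illustrative. The published break-out techniques generally depend on the confined process still holding root privileges, which is why the sketch gives them up immediately after the chroot() call. That narrows the risk but does not remove it.

```python
import os


def enter_chroot(new_root, uid, gid):
    """Confine the current process to new_root, then give up root privileges.

    Must be called as root; new_root, uid, and gid are supplied by the caller.
    """
    os.chroot(new_root)  # from here on, "/" refers to new_root
    os.chdir("/")        # make sure the working directory is inside the new root
    os.setgroups([])     # drop supplementary groups before changing IDs
    os.setgid(gid)       # group first: this is no longer allowed after setuid
    os.setuid(uid)       # finally become the unprivileged user
```

The order matters: changing the user ID first would leave the process without the privilege it needs to change its group.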

After listening to yet another person complain about having to split

their Web and database servers into two, more secure pieces, I decided

to ask around. I was fortunate enough to be at the USENIX Association's

(www.usenix.org) annual LISA (Large Installation System Administration)

conference, so there were plenty of people to ask.

I started with Curt Freeland, who teaches an e-commerce class at the

University of Notre Dame. Freeland stated that having two servers,

separated by a firewall, is the only safe way to do e-commerce.

But perhaps that is too "ivory-tower" for some people. So I asked

William LeFebvre, a senior system administrator for Turner Broadcasting.

LeFebvre works with a team of sysadmins to manage the Web farm for

over twenty different domains, including cnn.com. The average hit

rate during the US working day is 85,000 hits per minute, with peak

periods above 300,000 hits per minute. The news room needs to be

able to post new Web pages instantly, although this takes as long as

one minute in reality. And, of course, security is a concern here, as

CNN does not want just anyone posting Web pages that are viewed by

thousands every minute.

Not surprisingly, CNN.com does use backend servers. Multiprocessor

Solaris systems sit behind load balancers and handle requests for pages,

but get those pages from backend servers. So even in an environment

that makes 99.999% of Web sites look like small fry, the split between

front and back end servers is honored--in this case, largely for

performance and manageability reasons, and only secondarily for

security purposes.

While you might feel that you don't have the resources of Turner

Broadcasting at your site, I really do want you to protect your

sensitive data. Your Web server's task is to project a pretty

face for your site, one that is easy to use, while insulating your

sensitive data. Scripts or programs on your Web server interface

with your protected backend server, keeping your sensitive data

as safe as possible. All the popular database engines, whether you

use Microsoft, Oracle, Informix, or the free MySQL, support queries

over the network, so you really don't have much of an excuse.

Credit card information is the single most commonly sold type of data on

the information black market today. And any information that has

value to you likely has value to someone else as well. Protect that

data, and not by complaining that some search engine has indexed it.

Resources

Filetypes searched by Google:

http://www.google.com/help/features.html#pdf

Article about Google's new indexing/search features:

http://news.cnet.com/news/0-1005-200-7946411.html?tag=prntfr

Example of failure in IIS to prevent directory listing:

http://www.securiteam.com/windowsntfocus/2BUQGRFS3S.html

How the FreeBSD jail works:

http://docs.freebsd.org/44doc/papers/jail/jail-4.html#section4

Breaking out of the UNIX/Linux chroot() jail:

http://www.bpfh.net/simes/computing/chroot-break.html

Figure 1: The external firewall limits access to the Web servers to

just the HTTP (and perhaps HTTPS) protocols. The internal firewall

limits access from the Web servers to the port on the database

server for making SQL queries. If possible, the internal firewall

uses an SQL proxy to check the queries for correctness.
